Intro to GCP IoT Core

We often get asked how Synpse compares to, or competes with, the GCP IoT Core service. The short answer is that they operate in slightly different domains. GCP IoT Core focuses on connectivity between devices and applications, while Synpse targets the deployment of applications, which may or may not use services such as IoT Core.

The best results are achieved when the two are used together. For example, you can build an application locally that uses the GCP IoT Core message broker or device state services, and then use Synpse to distribute it to thousands of devices.

Example application

In this tutorial we will deploy a simple open-source application that collects metrics and sends them to GCP IoT Core for further processing. All code for this blog post can be found at:

- https://github.com/synpse-hq/metrics-nats-example-app - Sample metrics application

- https://github.com/synpse-hq/gcp-iot-core-example - GCP IoT Core example

Steps:

- Create a GCP IoT Core registry

- Configure a Dataflow job to forward the results into a Google Cloud Storage bucket

- Create a GCP device for Synpse

- Deploy a demo Synpse application made of three microservices: the metrics demo, NATS messaging and the GCP IoT Python forwarder containers

Technologies used

- Synpse - manage devices and deploy applications to them

- NATS - a lightweight message broker that can run on-prem

- Google IoT Core - message broker between all devices and GCP

GCP IoT Core

GCP IoT Core organizes devices into a “device registry”, so creating one is one of the first steps.

Configure GCP IoT Core and create a registry

- Create a Pub/Sub topic for the registry:

```shell
gcloud pubsub topics create synpse-events
```

- Create the GCP IoT registry, replacing {project_id} with your project ID:

```shell
gcloud iot registries create synpse-registry --region=us-central1 --enable-http-config --enable-mqtt-config --state-pubsub-topic projects/{project_id}/topics/synpse-events
```
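Both the --state-pubsub-topic flag and the Dataflow inputTopic parameter expect a fully qualified Pub/Sub topic name, so the same project ID has to be used everywhere. A small sketch of how these standard GCP resource names are composed; the helper names and the placeholder project ID are hypothetical:

```python
# Hypothetical helpers: build fully qualified GCP resource names once, so the
# same project ID ends up in every gcloud command.


def pubsub_topic_path(project_id: str, topic: str) -> str:
    """Topic name as expected by --state-pubsub-topic and Dataflow's inputTopic."""
    return f"projects/{project_id}/topics/{topic}"


def registry_path(project_id: str, region: str, registry_id: str) -> str:
    """Full resource name of an IoT Core device registry."""
    return f"projects/{project_id}/locations/{region}/registries/{registry_id}"


if __name__ == "__main__":
    project = "my-project-id"  # assumption: replace with your own project ID
    print(pubsub_topic_path(project, "synpse-events"))
    print(registry_path(project, "us-central1", "synpse-registry"))
```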

- Create a Cloud Storage bucket for the events:

```shell
gsutil mb -l us-central1 -b on gs://synpse-events
```

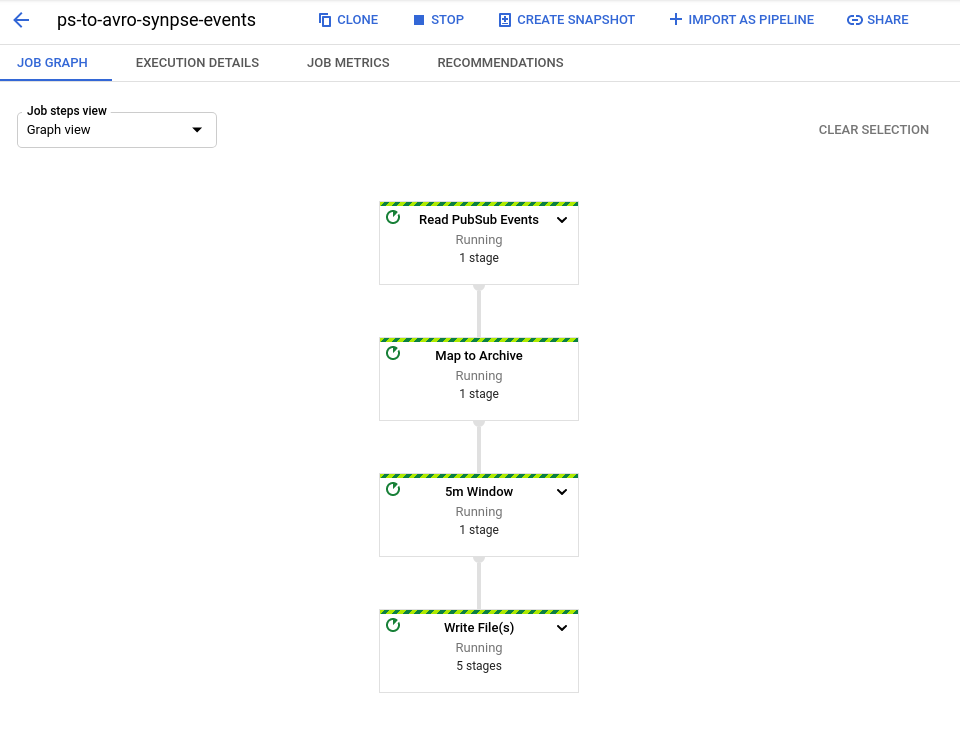

- Create a Dataflow job from the Cloud_PubSub_to_Avro template:

```shell
gcloud dataflow jobs run ps-to-avro-synpse-events --gcs-location gs://dataflow-templates-us-central1/latest/Cloud_PubSub_to_Avro --region us-central1 --staging-location gs://synpse-events/temp --parameters inputTopic=projects/iot-hub-xxxxxx/topics/synpse-events,outputDirectory=gs://synpse-events/events,avroTempDirectory=gs://synpse-events/avro-temp
```

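The resulting Dataflow pipeline reads messages from the synpse-events topic and writes them as Avro files under gs://synpse-events/events.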

- Create a device:

```shell
gcloud iot devices create synpse --region=us-central1 --registry=synpse-registry
```

- Generate an EC (P-256) key pair for the device:

```shell
openssl ecparam -genkey -name prime256v1 -noout -out ec_private.pem
openssl ec -in ec_private.pem -pubout -out ec_public.pem
```

- Add the public key to the device in GCP IoT Core:

```shell
gcloud iot devices credentials create --region=us-central1 --registry=synpse-registry --device=synpse --path=ec_public.pem --type=es256
```

- Download the Google root CA certificates:

```shell
curl https://pki.goog/roots.pem -o roots.pem
```

- (Optional) Test the application locally:

```shell
python gateway/gcp.py --device_id synpse --private_key_file ./ec_private.pem --cloud_region=us-central1 --registry_id synpse-registry --project_id iot-hub-326815 --algorithm ES256 --message_type state
```
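The flags passed to gcp.py map onto the Cloud IoT Core MQTT bridge connection roughly as follows. This sketch assumes gcp.py follows Google's MQTT sample, which is an assumption on our part, and the helper names are hypothetical. The MQTT client ID must be the full device path, and --message_type selects between the /state and /events topics:

```python
# Sketch: how the forwarder's flags translate into MQTT bridge settings
# (assumption: gcp.py is based on Google's Cloud IoT Core MQTT sample).

MQTT_BRIDGE_HOST = "mqtt.googleapis.com"
MQTT_BRIDGE_PORT = 8883  # TLS port; the server is verified against roots.pem


def mqtt_client_id(project_id: str, cloud_region: str, registry_id: str, device_id: str) -> str:
    """IoT Core requires the full device path as the MQTT client ID."""
    return (
        f"projects/{project_id}/locations/{cloud_region}"
        f"/registries/{registry_id}/devices/{device_id}"
    )


def mqtt_topic(device_id: str, message_type: str) -> str:
    """'state' publishes device state, 'event' publishes telemetry events."""
    if message_type not in ("event", "state"):
        raise ValueError(f"unsupported message type: {message_type}")
    sub_topic = "events" if message_type == "event" else "state"
    return f"/devices/{device_id}/{sub_topic}"


if __name__ == "__main__":
    print(mqtt_client_id("iot-hub-326815", "us-central1", "synpse-registry", "synpse"))
    print(mqtt_topic("synpse", "state"))
```

With --message_type state the forwarder publishes device state, which IoT Core forwards to the registry's state Pub/Sub topic. This is why the registry was created with --state-pubsub-topic.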

Deploy an application

Before deploying the Synpse application, modify the application YAML with your own project ID, region, registry and device ID.

- Create secrets for the device private key and the Google root CA:

```shell
synpse secret create gcp-cert -f ec_private.pem
synpse secret create gcp-root -f roots.pem
```
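A common mistake is to upload the wrong file into a secret, for example the public key instead of the private key. Here is a minimal sanity check you can run before creating the secrets. It assumes the files were produced by the openssl and curl commands above, so the private key is a single "EC PRIVATE KEY" block and roots.pem contains only "CERTIFICATE" blocks. The function names are hypothetical:

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches the "-----BEGIN <LABEL>-----" line of each PEM block.
_PEM_BEGIN = re.compile(r"^-----BEGIN ([A-Z0-9 ]+)-----$", re.MULTILINE)


def pem_labels(text: str) -> list[str]:
    """Return the labels of all PEM blocks in text, in order."""
    return _PEM_BEGIN.findall(text)


def check_secret_files(private_key: str, roots: str) -> None:
    """Raise ValueError if the files do not look like what the secrets expect."""
    key_labels = pem_labels(Path(private_key).read_text())
    if key_labels != ["EC PRIVATE KEY"]:
        raise ValueError(f"{private_key}: expected one EC PRIVATE KEY block, got {key_labels}")
    root_labels = pem_labels(Path(roots).read_text())
    if not root_labels or any(label != "CERTIFICATE" for label in root_labels):
        raise ValueError(f"{roots}: expected only CERTIFICATE blocks, got {root_labels}")
```

Call check_secret_files("ec_private.pem", "roots.pem") in the directory where you generated the files. Note that a key converted to PKCS#8 would carry the label "PRIVATE KEY" and fail this deliberately strict check.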

Deploy the application:

```shell
synpse deploy -f synpse-gcp-example.yaml
```

where synpse-gcp-example.yaml is:

```yaml
name: GCP-IoT-Hub
description: Google Cloud IoT Core Synpse example
scheduling:
  type: Conditional
  selectors:
    gcp: iot
spec:
  containers:
    - name: nats
      image: nats
      restartPolicy: {}
    - name: metrics
      image: quay.io/synpse/metrics-nats-example-app
      restartPolicy: {}
    - name: gcp-iot
      image: quay.io/synpse/gcp-iot-hub-example
      command: /server/gcp.py
      args:
        - --device_id=synpse
        - --private_key_file=/server/ec_private.pem
        - --cloud_region=us-central1
        - --registry_id=synpse-registry
        - --project_id=iot-hub-326815
        - --algorithm=ES256
        - --message_type=state
        - --ca_certs=/server/roots.pem
      secrets:
        - name: gcp-cert
          filepath: /server/ec_private.pem
        - name: gcp-root
          filepath: /server/roots.pem
      env:
        - name: NATS_HOSTNAME
          value: nats
      restartPolicy: {}
```
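The env section sets NATS_HOSTNAME=nats, which presumably lets the forwarder reach the container named nats in the same application. Below is a minimal sketch of how a forwarder could turn that variable into a connection URL. The nats_url helper, the localhost fallback and the use of NATS's default client port 4222 are assumptions, not code from the example repository:

```python
import os
from typing import Mapping, Optional

NATS_DEFAULT_PORT = 4222  # default NATS client port


def nats_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build the NATS server URL from NATS_HOSTNAME (hypothetical helper)."""
    env = os.environ if env is None else env
    # Fall back to localhost when running outside Synpse, e.g. during local testing.
    host = env.get("NATS_HOSTNAME", "localhost")
    return f"nats://{host}:{NATS_DEFAULT_PORT}"
```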

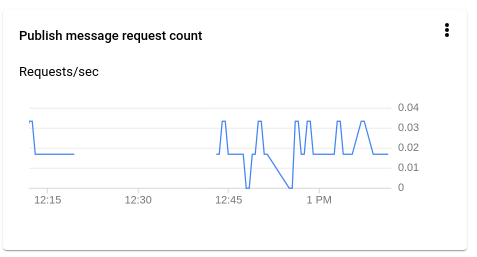

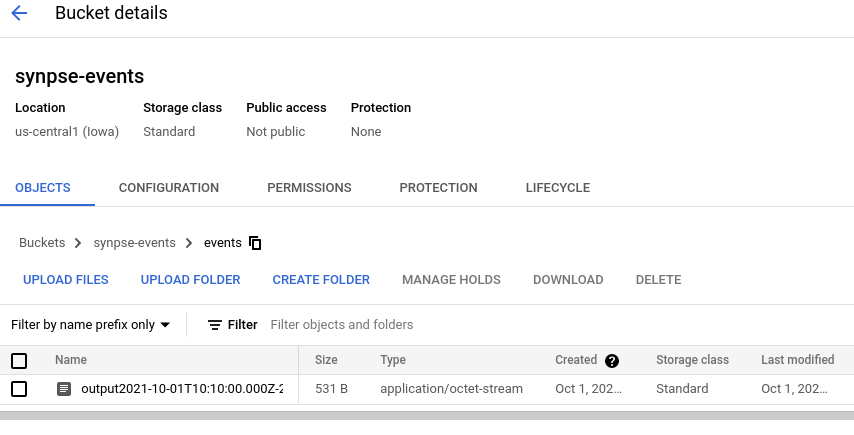

Once the application is deployed, you should see it running and data arriving in the Cloud Storage bucket.

Things to look for

The Google SDK, like most other GCP services, is very flexible, in some cases even too flexible. For example, devices authenticate with a JWT that the device signs with its private key and GCP verifies against the registered public key. This means the application developer has to make sure the JWT is refreshed and stays valid. In addition, the application is expected to “refresh” its network connection (reconnect) when the token is renewed. All of this is understandable, but in the author's opinion it should be abstracted away by the SDK itself: authentication, authorization and connection management should be out of scope for the SDK user.
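A minimal sketch of the token handling described above. It assumes IoT Core's JWT rules: iat and exp claims, the project ID as aud, and at most 24 hours of validity. Signing is delegated to a sign() callable so the example stays dependency-free. TokenRefresher, the 20-minute default lifetime and the 60-second margin are illustrative choices, not part of any SDK:

```python
import base64
import json
import time
from typing import Callable, Optional

MAX_TOKEN_LIFETIME = 24 * 60 * 60  # IoT Core rejects tokens valid for more than 24 hours


def b64url(data: bytes) -> str:
    """Base64url without padding, as used by JWS/JWT (RFC 7515)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def build_claims(project_id: str, now: int, lifetime_s: int = 20 * 60) -> dict:
    """IoT Core expects iat/exp timestamps and the project ID as audience."""
    if not 0 < lifetime_s <= MAX_TOKEN_LIFETIME:
        raise ValueError("token lifetime must be between 1 second and 24 hours")
    return {"iat": now, "exp": now + lifetime_s, "aud": project_id}


def encode_jwt(claims: dict, sign: Callable[[bytes], bytes]) -> str:
    """Assemble an ES256 JWT; sign() must return the raw 64-byte r||s signature."""
    header = {"alg": "ES256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode()) for part in (header, claims)
    )
    return f"{signing_input}.{b64url(sign(signing_input.encode('ascii')))}"


class TokenRefresher:
    """Re-issues the token once it is within margin_s seconds of expiring."""

    def __init__(self, project_id: str, sign: Callable[[bytes], bytes],
                 lifetime_s: int = 20 * 60, margin_s: int = 60):
        self.project_id = project_id
        self.sign = sign
        self.lifetime_s = lifetime_s
        self.margin_s = margin_s
        self.claims: Optional[dict] = None
        self.token: Optional[str] = None

    def get(self, now: Optional[int] = None) -> str:
        now = int(time.time()) if now is None else now
        if self.claims is None or now >= self.claims["exp"] - self.margin_s:
            self.claims = build_claims(self.project_id, now, self.lifetime_s)
            self.token = encode_jwt(self.claims, self.sign)
        return self.token
```

In practice, PyJWT's jwt.encode(claims, private_key, algorithm="ES256") produces the same kind of token from ec_private.pem. Whenever get() returns a new token, the MQTT client has to reconnect using it as the password. This is the connection “refresh” the SDK leaves to the developer.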

The documentation on how to handle the data (state, events and config topics) is quite complicated, but it is understandable.

Overall we found GCP pleasant to configure and use.

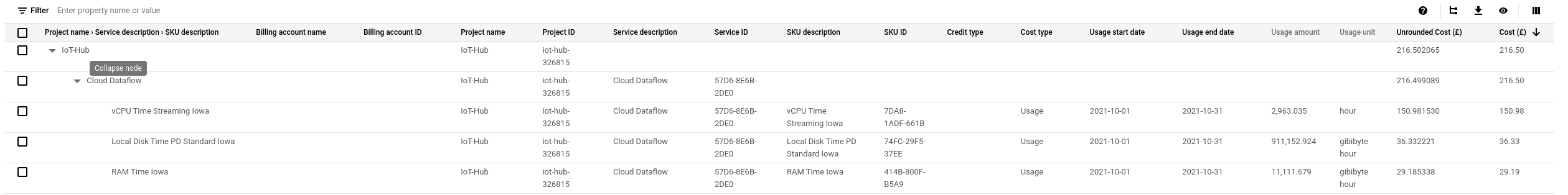

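Important: we received a $300 Dataflow invoice at the end of the month, so be aware! That is a rather outrageous price, given that the same demo on AWS and Azure cost us no more than $15 in total. Streaming Dataflow jobs keep their workers running until the job is cancelled, so cancel the job once you are done with the demo.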

Wrapping up

This is a simple way to use Google IoT Core with Synpse. When it comes to consuming and managing large amounts of data, building complex applications and integrating seamlessly into your existing technology stack, nothing beats the public cloud. Where the cloud falls short is IoT device and application management.

Public cloud providers are built on the assumption that they manage the infrastructure for you. Devices, however, are yours and yours only. This is where public cloud providers lack influence, and where Synpse comes into the picture.

If you have any questions or suggestions, feel free to start a new discussion in our forum or drop us a line on Discord.